4.2.3.8. Model Accuracy Debugging Tools

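When a QAT or quantized (fixed-point) model has accuracy problems, you can use the tools described below to analyze the model and locate the source of the accuracy drop.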

4.2.3.8.1. Similarity Comparison

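Sometimes a QAT model loses a lot of accuracy compared with the float model, or a quantized model does compared with the float model. In that case, use the similarity comparison tool to compare the output of every layer in the two models. This quickly shows which layer or op causes the drop.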

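```python
# from horizon_plugin_pytorch.utils.quant_profiler import featuremap_similarity

def featuremap_similarity(
    model1: torch.nn.Module,
    model2: torch.nn.Module,
    inputs: Any,
    similarity_func: Union[str, Callable] = "Cosine",
    threshold: Optional[Real] = None,
    devices: Union[torch.device, tuple, None] = None,
    out_dir: Optional[str] = None,
):
    """Compare the feature maps of two models layer by layer.

    Args:
        model1: first model to compare.
        model2: second model to compare.
        inputs: model inputs; must match the number of arguments of the
            model's forward function.
        similarity_func: how similarity is computed. Defaults to cosine
            similarity ("Cosine"). Supported values:
            - "Cosine": cosine similarity
            - "MSE": L2 distance
            - "L1": L1 distance
            - "KL": KL divergence
            - "SQNR": signal-to-quantization-noise ratio
            - a callable: a custom similarity function
        threshold: defaults to None. If a number is given, values above or
            below it (depending on the metric) are printed together with the
            corresponding op name. For cosine similarity and SQNR, larger
            values mean higher similarity. For all other metrics, smaller
            values mean higher similarity.
        devices: device(s) on which the models run forward.
            - None: each model runs on the device its inputs are on.
            - A single value such as torch.device("cpu"): both models are
              moved to that device.
            - Two values such as (torch.device("cpu"), torch.device("cuda")):
              each model is moved to its own device. This is typically used
              to compare the CPU and GPU intermediate results of the same
              model at the same stage.
        out_dir: directory for the result files and plots. Defaults to None,
            which saves them to the current directory.
    """
```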

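The metric names above are easier to understand side by side. Below is a minimal pure-Python sketch of each metric applied to two flattened layer outputs, plus the threshold direction described above. It is not the plugin's implementation. Several details are assumptions:

- whether "MSE" and "L1" are averaged;
- how "KL" turns features into distributions (softmax here);
- the dB form of SQNR.

`flag_layers` is a hypothetical helper that reads "printed" as "less similar than the threshold".

```python
import math
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; 0.0 if either output is all zeros (as described above)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def mse(a: Vector, b: Vector) -> float:
    """Mean squared difference (assumed averaged)."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def l1(a: Vector, b: Vector) -> float:
    """Mean absolute difference (assumed averaged)."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def kl(a: Vector, b: Vector) -> float:
    """KL(P || Q) with P, Q the softmax-normalized outputs (an assumption)."""
    def softmax(v: Vector) -> List[float]:
        m = max(v)
        e = [math.exp(x - m) for x in v]
        s = sum(e)
        return [x / s for x in e]

    p, q = softmax(a), softmax(b)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def sqnr(a: Vector, b: Vector) -> float:
    """SQNR in dB with `a` as the reference; inf when the outputs are identical."""
    noise = sum((x - y) ** 2 for x, y in zip(a, b))
    if noise == 0.0:
        return math.inf
    signal = sum(x * x for x in a)
    if signal == 0.0:
        return -math.inf
    return 10.0 * math.log10(signal / noise)


METRICS: Dict[str, Callable[[Vector, Vector], float]] = {
    "Cosine": cosine, "MSE": mse, "L1": l1, "KL": kl, "SQNR": sqnr,
}
HIGHER_IS_MORE_SIMILAR = {"Cosine", "SQNR"}


def flag_layers(
    layers: List[Tuple[str, Vector, Vector]],
    metric: str = "Cosine",
    threshold: Optional[float] = None,
) -> List[Tuple[str, float]]:
    """Return (layer name, value) for layers less similar than `threshold`."""
    func = METRICS[metric]
    flagged = []
    for name, out1, out2 in layers:
        value = func(out1, out2)
        if threshold is None:
            continue
        if metric in HIGHER_IS_MORE_SIMILAR:
            worse = value < threshold
        else:
            worse = value > threshold
        if worse:
            flagged.append((name, value))
    return flagged
```

For example, `flag_layers([("conv", [1.0, 0.0], [0.0, 1.0])], "Cosine", 0.99)` flags the layer because its cosine similarity (0.0) is below the threshold. With "MSE", the same threshold value would flag layers whose error is above it.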
使用时需注意以下几点:

默认会输出

对应层的相似度

结果的 scale(如果有的话)

结果的最大误差(atol)

单算子误差(单算子误差=结果的最大误差 atol / 结果的 scale)

相同输入情况下结果的单算子误差

其中,单算子误差是指每一层的结果相差多少个 scale,包含了累积误差的影响,因为这一层的输入在前面几层结果偏差的影响下可能已经不一样了;而相同输入下的单算子误差,指的是将这一层输入手动设置成完全一样的,再计算两个模型这一层输出的误差。理论上相同输入下的单算子误差应该都在几个 scale 之内,如果相差很大,则说明该 op 转换可能存在问题导致结果相差很多。

支持任意两阶段的模型以任意输入顺序,在任意两个 device 上比较相似度。推荐按照 float/qat/quantized 的顺序输入,比如(float,qat)(float,quantized)(qat,quantized)这样。如果是(qat,float)的顺序,对相似度和单算子误差没有影响,但是输出的 相同输入下的单算子误差 可能会有偏差,因为无法生成和 float 模型完全对应的输入给 qat 模型。此外,因为 qat 训练之后,模型参数会改变,所以直接比较 float 和训练之后的 qat 模型的相似度参考意义不大,建议比较 float 和经过 calibration 之后且未 forward 的 qat 模型的相似度。

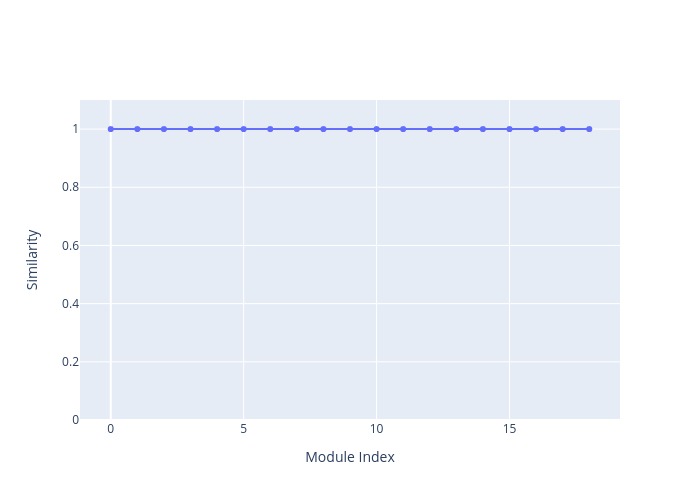

函数会将结果保存到 txt 文件,同时绘制相似度的变化曲线,保存成图片。会生成如下文件:

similarity.txt:按照模型 forward 的顺序打印每一层的相似度和单算子误差等结果

ordered_op_error_similarity.txt:按照相同输入下单算子误差从高到低进行排序的结果,方便用户快速定位是哪个 op 的 convert 误差较大

similarity.html:一个可交互的图片,显示随着模型 forward,每一层相似度的变化曲线。可以放大缩小,光标移动到对应的点可以显示具体的相似度数值。

若模型为多输入,应将多个输入组合成 tuple,传递给 inputs 参数

若某一层的输出全为0,使用余弦相似度计算时相似度结果也是0。此时可以检查一下该层输出是否为全0,或者根据打印的 atol 等指标确认一下输出是否相同。若某一层的输出完全相同,使用信噪比计算相似度时结果为inf。

若

device=None,函数不会做模型和输入的搬运,用户需要保证模型和模型输入均在同一个device上。输出会以“layer名–相似度结果”的格式逐层打印每一层输出的相似度。输出的粒度为 op 级别。

若模块名有后缀’(I)’,表示该 op 在某一个模型中为 Identity

若模块名有后缀’(I vs I)’,表示该 op 在待比较的两个模型中均为 Identity

若模块名有后缀’(i)’ (i >= 1),表示该层为共享 op,且被共享了 i 次,目前是第 i+1 次调用。共享 op 第1次被调用时和其他 op 一样,不带后缀。
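The two "N out_qscale" quantities and the ordering of ordered_op_error_similarity.txt can be reproduced from the values described above. This is an assumption-based sketch, not the plugin's code:

- The error in scales is taken as atol divided by the output scale, rounded to the nearest integer. The rounding rule is inferred from the sample tables in the example later in this section (0.0000186 / 0.0000037 is reported as 5, and 0.0005081 / 0.0002541 as 2).
- Rows are sorted by the same-input op error in descending order using a stable sort, so ties keep forward order. This matches the sample ordered output.

`error_in_scales` and `order_by_op_error` are hypothetical names.

```python
from typing import List, Optional, Tuple

# (index, module name, op error with same input in scales), in forward order
Row = Tuple[int, str, int]


def error_in_scales(atol: float, scale: Optional[float]) -> int:
    """Express an absolute error as a number of output scales.

    Rounding to the nearest integer is inferred from the sample tables,
    not taken from the plugin. Layers without a scale report 0.
    """
    if not scale:
        return 0
    return round(atol / scale)


def order_by_op_error(rows: List[Row]) -> List[Row]:
    """Largest same-input op error first; sorted() is stable, so ties keep forward order."""
    return sorted(rows, key=lambda row: row[2], reverse=True)
```

Python's `sorted` stays stable even with `reverse=True`. That is why layers with equal error keep their forward order in this sketch.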

4.2.3.8.1.1. Example

```python
import torch
from torch import nn
from torch.quantization import DeQuantStub, QuantStub

import horizon_plugin_pytorch as horizon
from horizon_plugin_pytorch import nn as horizon_nn
from horizon_plugin_pytorch.march import March, set_march
from horizon_plugin_pytorch.nn.quantized import FloatFunctional
from horizon_plugin_pytorch.utils.quant_profiler import featuremap_similarity


class Net(nn.Module):
    def __init__(self, quant=False, share_op=True):
        super(Net, self).__init__()

        self.quant_stubx = QuantStub()
        self.quant_stuby = QuantStub()
        self.mul_op = FloatFunctional()
        self.cat_op = FloatFunctional()
        self.quantized_ops = nn.Sequential(
            nn.ReLU(),
            nn.Sigmoid(),
            nn.Softmax(),
            nn.SiLU(),
            horizon_nn.Interpolate(
                scale_factor=2, recompute_scale_factor=True
            ),
            horizon_nn.Interpolate(
                scale_factor=2.3, recompute_scale_factor=True
            ),
            nn.AvgPool2d(kernel_size=4),
            nn.Upsample(scale_factor=1.3, mode="bilinear"),
            nn.UpsamplingBilinear2d(scale_factor=0.7),
        )
        self.dequant_stub = DeQuantStub()
        # Float-only ops; they run only when quant=False
        self.float_ops = nn.Sequential(
            nn.Tanh(),
            nn.LeakyReLU(),
            nn.PReLU(),
            nn.UpsamplingNearest2d(scale_factor=0.7),
        )
        self.quant = quant
        self.share_op = share_op

    def forward(self, x, y):
        x = self.quant_stubx(x)
        y = self.quant_stuby(y)
        # z is not used further; the call only adds mul_op to the comparison
        z = self.mul_op.mul(x, y)
        x = self.cat_op.cat((x, y), dim=1)
        if self.share_op:
            # Calling cat_op a second time makes it a shared op: cat_op(1)
            x = self.cat_op.cat((x, y), dim=1)
        x = self.quantized_ops(x)
        x = self.dequant_stub(x)
        if not self.quant:
            x = self.float_ops(x)
        return x


set_march(March.BAYES)
device = torch.device("cuda")

float_net = Net(quant=True, share_op=True).to(device)
float_net.qconfig = horizon.quantization.get_default_qat_qconfig()
qat_net = horizon.quantization.prepare_qat(float_net, inplace=False)
qat_net = qat_net.to(device)

data = torch.arange(1 * 3 * 4 * 4) / 100 + 1
data = data.reshape((1, 3, 4, 4))
data = data.to(torch.float32).to(device)

# Net.forward takes two inputs, so they are passed as a tuple
featuremap_similarity(float_net, qat_net, (data, data))
```

After running, the following files are generated in the current directory, or in the directory given by the `out_dir` argument:

similarity.txt

```text
---------------------------------------------------------------
Note:
* Suffix '(I)' means this layer is Identity in one model
* Suffix '(I vs I)' means this layer is Identity in both models
* Suffix '(i)'(i >= 1) means this op is shared i times
---------------------------------------------------------------
```

| Index | Module Name | Module Type | Similarity | qscale | Acc Error (float atol) | Acc Error (N out_qscale) | Op Error with Same Input (N out_qscale) |
|---|---|---|---|---|---|---|---|
| 0 | quant_stubx | `<class 'horizon_plugin_pytorch.nn.qat.stubs.QuantStub'>` | 1.0000000 | 0.0115294 | 0.0000000 | 0 | 0 |
| 1 | quant_stuby | `<class 'horizon_plugin_pytorch.nn.qat.stubs.QuantStub'>` | 1.0000000 | 0.0115294 | 0.0000000 | 0 | 0 |
| 2 | mul_op | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | 0.9999989 | 0.0168156 | 0.0168156 | 1 | 1 |
| 3 | cat_op | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | 0.9999971 | 0.0167490 | 0.0334979 | 2 | 0 |
| 4 | cat_op(1) | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | 0.9999980 | 0.0167490 | 0.0334979 | 2 | 0 |
| 5 | quantized_ops.0 | `<class 'horizon_plugin_pytorch.nn.qat.relu.ReLU'>` | 0.9999980 | 0.0167490 | 0.0334979 | 2 | 0 |
| 6 | quantized_ops.1 | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | 1.0000000 | 0.0070079 | 0.0000000 | 0 | 0 |
| 7 | quantized_ops.2.sub | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | 0.9999999 | 0.0000041 | 0.0000041 | 1 | 1 |
| 8 | quantized_ops.2.exp | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | 1.0000000 | 0.0000305 | 0.0000305 | 1 | 1 |
| 9 | quantized_ops.2.sum | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | 1.0000000 | 0.0002541 | 0.0005081 | 2 | 2 |
| 10 | quantized_ops.2.reciprocal | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | 1.0000001 | 0.0000037 | 0.0000186 | 5 | 5 |
| 11 | quantized_ops.2.mul | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | 1.0000000 | 0.0009545 | 0.0000000 | 0 | 0 |
| 12 | quantized_ops.3 | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | 1.0000000 | 0.0005042 | 0.0000000 | 0 | 0 |
| 13 | quantized_ops.4 | `<class 'horizon_plugin_pytorch.nn.qat.interpolate.Interpolate'>` | 1.0000000 | 0.0005042 | 0.0005042 | 1 | 1 |
| 14 | quantized_ops.5 | `<class 'horizon_plugin_pytorch.nn.qat.interpolate.Interpolate'>` | 0.9999999 | 0.0005042 | 0.0005042 | 1 | 0 |
| 15 | quantized_ops.6 | `<class 'horizon_plugin_pytorch.nn.qat.avg_pool2d.AvgPool2d'>` | 0.9999995 | 0.0005022 | 0.0005022 | 1 | 1 |
| 16 | quantized_ops.7 | `<class 'horizon_plugin_pytorch.nn.qat.upsampling.Upsample'>` | 0.9999998 | 0.0005022 | 0.0005022 | 1 | 0 |
| 17 | quantized_ops.8 | `<class 'horizon_plugin_pytorch.nn.qat.upsampling.UpsamplingBilinear2d'>` | 1.0000000 | 0.0005022 | 0.0000000 | 0 | 0 |
| 18 | dequant_stub | `<class 'horizon_plugin_pytorch.nn.qat.stubs.DeQuantStub'>` | 1.0000000 |  | 0.0000000 | 0 | 0 |

ordered_op_error_similarity.txt

```text
---------------------------------------------------------------
Note:
* Suffix '(I)' means this layer is Identity in one model
* Suffix '(I vs I)' means this layer is Identity in both models
* Suffix '(i)'(i >= 1) means this op is shared i times
---------------------------------------------------------------
```

| Index | Module Name | Module Type | Similarity | qscale | Acc Error (float atol) | Acc Error (N out_qscale) | Op Error with Same Input (N out_qscale) |
|---|---|---|---|---|---|---|---|
| 10 | quantized_ops.2.reciprocal | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | 1.0000001 | 0.0000037 | 0.0000186 | 5 | 5 |
| 9 | quantized_ops.2.sum | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | 1.0000000 | 0.0002541 | 0.0005081 | 2 | 2 |
| 2 | mul_op | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | 0.9999989 | 0.0168156 | 0.0168156 | 1 | 1 |
| 7 | quantized_ops.2.sub | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | 0.9999999 | 0.0000041 | 0.0000041 | 1 | 1 |
| 8 | quantized_ops.2.exp | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | 1.0000000 | 0.0000305 | 0.0000305 | 1 | 1 |
| 13 | quantized_ops.4 | `<class 'horizon_plugin_pytorch.nn.qat.interpolate.Interpolate'>` | 1.0000000 | 0.0005042 | 0.0005042 | 1 | 1 |
| 15 | quantized_ops.6 | `<class 'horizon_plugin_pytorch.nn.qat.avg_pool2d.AvgPool2d'>` | 0.9999995 | 0.0005022 | 0.0005022 | 1 | 1 |
| 0 | quant_stubx | `<class 'horizon_plugin_pytorch.nn.qat.stubs.QuantStub'>` | 1.0000000 | 0.0115294 | 0.0000000 | 0 | 0 |
| 1 | quant_stuby | `<class 'horizon_plugin_pytorch.nn.qat.stubs.QuantStub'>` | 1.0000000 | 0.0115294 | 0.0000000 | 0 | 0 |
| 3 | cat_op | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | 0.9999971 | 0.0167490 | 0.0334979 | 2 | 0 |
| 4 | cat_op(1) | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | 0.9999980 | 0.0167490 | 0.0334979 | 2 | 0 |
| 5 | quantized_ops.0 | `<class 'horizon_plugin_pytorch.nn.qat.relu.ReLU'>` | 0.9999980 | 0.0167490 | 0.0334979 | 2 | 0 |
| 6 | quantized_ops.1 | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | 1.0000000 | 0.0070079 | 0.0000000 | 0 | 0 |
| 11 | quantized_ops.2.mul | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | 1.0000000 | 0.0009545 | 0.0000000 | 0 | 0 |
| 12 | quantized_ops.3 | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | 1.0000000 | 0.0005042 | 0.0000000 | 0 | 0 |
| 14 | quantized_ops.5 | `<class 'horizon_plugin_pytorch.nn.qat.interpolate.Interpolate'>` | 0.9999999 | 0.0005042 | 0.0005042 | 1 | 0 |
| 16 | quantized_ops.7 | `<class 'horizon_plugin_pytorch.nn.qat.upsampling.Upsample'>` | 0.9999998 | 0.0005022 | 0.0005022 | 1 | 0 |
| 17 | quantized_ops.8 | `<class 'horizon_plugin_pytorch.nn.qat.upsampling.UpsamplingBilinear2d'>` | 1.0000000 | 0.0005022 | 0.0000000 | 0 | 0 |
| 18 | dequant_stub | `<class 'horizon_plugin_pytorch.nn.qat.stubs.DeQuantStub'>` | 1.0000000 |  | 0.0000000 | 0 | 0 |

similarity.html

4.2.3.8.2. Visualization

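The plugin currently supports visualizing models at any stage. Here, visualization means visualizing the model structure. By default the model is exported to ONNX, which can be viewed with Netron.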

4.2.3.8.2.1. Model Visualization

```python
# from horizon_plugin_pytorch.utils.onnx_helper import export_to_onnx, export_quantized_onnx

export_to_onnx(
    model,
    args,
    f,
    export_params=True,
    verbose=False,
    training=TrainingMode.EVAL,
    input_names=None,
    output_names=None,
    operator_export_type=OperatorExportTypes.ONNX_FALLTHROUGH,
    opset_version=11,
    do_constant_folding=True,
    example_outputs=None,
    dynamic_axes=None,
    enable_onnx_checker=False,
)

export_quantized_onnx(
    model,
    args,
    f,
    export_params=True,
    verbose=False,
    training=TrainingMode.EVAL,
    input_names=None,
    output_names=None,
    operator_export_type=OperatorExportTypes.ONNX_FALLTHROUGH,
    opset_version=None,
    do_constant_folding=True,
    example_outputs=None,
    dynamic_axes=None,
    keep_initializers_as_inputs=None,
    custom_opsets=None,
)
```

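The parameters have the same meaning as in `torch.onnx.export`. The only difference is that `operator_export_type` defaults to `OperatorExportTypes.ONNX_FALLTHROUGH`. With this setting, ops that have no standard ONNX conversion are exported as custom ops instead of causing the export to fail.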

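Notes:

- Use `export_to_onnx` to export float and QAT models to ONNX.
- Use `export_quantized_onnx` to export quantized models to ONNX.
- The visualization granularity is the custom ops defined in the plugin, both float and quantized. The internal implementation of an op is not visualized.
- For community (native PyTorch) ops used in the float model, the visualization granularity is determined by the community implementation.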

Example

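```python
import torch

import horizon_plugin_pytorch as horizon
from horizon_plugin_pytorch.march import March, set_march
from horizon_plugin_pytorch.utils.onnx_helper import (
    export_quantized_onnx,
    export_to_onnx,
)

# Net is the model defined in the similarity comparison example above
set_march(March.BAYES)
device = torch.device("cuda")

float_net = Net(quant=True, share_op=True).to(device)
float_net.qconfig = horizon.quantization.get_default_qat_qconfig()
qat_net = horizon.quantization.prepare_qat(float_net, inplace=False)
qat_net = qat_net.to(device)
quantized_net = horizon.quantization.convert(qat_net, inplace=False)

data = torch.arange(1 * 3 * 4 * 4) / 100 + 1
data = data.reshape((1, 3, 4, 4))
data = data.to(torch.float32).to(device)

# Float and QAT models use export_to_onnx; the quantized model uses export_quantized_onnx
export_to_onnx(float_net, (data, data), "float_test.onnx")
export_to_onnx(qat_net, (data, data), "qat_test.onnx")
export_quantized_onnx(quantized_net, (data, data), "quantized_test.onnx")
```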

4.2.3.8.2.2. PT File Visualization

TorchScript models can also be visualized. After you install a patched Netron, you can open .pt files directly in it. To install:

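```bash
# Install netron manually (quoted so the shell does not treat ">" as a redirect)
pip install "netron>=6.0.2"

# Patch netron with the script shipped in the plugin
python -m horizon_plugin_pytorch.utils.patch_netron
```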

4.2.3.8.3. Statistics

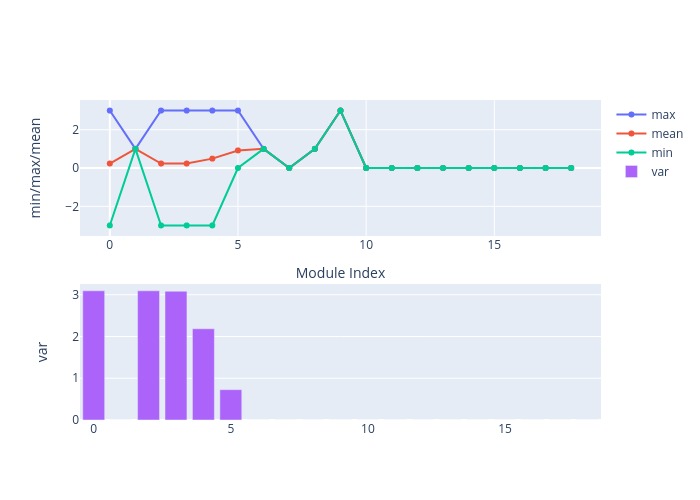

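This tool computes statistics of each layer's inputs and outputs and prints the results. By default it prints min/max/mean/var/scale. The statistics help you judge whether the data distribution is suitable for quantization and which quantization precision to choose.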

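```python
# from horizon_plugin_pytorch.utils.quant_profiler import get_raw_features, profile_featuremap

def get_raw_features(
    model: torch.nn.Module,
    example_inputs: Any,
    prefixes: Tuple = (),
    types: Tuple = (),
    device: torch.device = None,
    preserve_int: bool = False,
    use_class_name: bool = False,
    skip_identity: bool = False,
):
    """
    Args:
        model: the model whose statistics are collected.
        example_inputs: inputs of the model.
        prefixes: layer names of the ops to collect (layers whose names
            start with one of the prefixes).
        types: types of the ops to collect.
        device: whether the model runs forward on CPU or GPU.
        preserve_int: whether to output fixed-point (integer) values. Float
            values are output by default. This only takes effect for QAT and
            quantized models, and only for layers whose outputs have a scale.
            For example, the output of a dequant layer is float, so this
            argument has no effect there.
        use_class_name: whether to print the name of each op; by default
            the op type is printed.
        skip_identity: whether to skip Identity ops. By default, statistics
            are output for ops of all types.

    Returns:
        list(dict): one dict per entry, describing the input/output values
        and some parameter values of each layer, in the format:
        - "module_name": (str) name of the module in the original model
        - "attr": (str) module attribute, such as input/output/weight/bias.
          input/output are the layer's input/output; the others are the
          module's parameters
        - "data": (Tensor) value of that attribute. For a QTensor, the
          dequantized value is recorded
        - "scale": (Tensor | None) for a QTensor, its scale, which may be
          per-tensor or per-channel; otherwise None
        - "ch_axis": (int) for per-channel quantized data, the quantization
          axis; otherwise -1
        - "ff_method": (str) if the module is a FloatFunctional/QFunctional,
          the method actually called (add/sub/mul/...); otherwise None
    """
```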

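Because `get_raw_features` returns plain records, a custom statistic is just a loop over them. This is a minimal sketch with the following assumptions:

- Each record's "data" has already been flattened into a list of floats. For a real tensor, something like `rec["data"].flatten().tolist()` would do this.
- "scale" is a single per-tensor float. Per-channel scales are not handled.

`summarize` and its `range_in_scales` field are hypothetical. They illustrate a statistic that the default table does not show.

```python
from typing import Dict, List, Optional


def summarize(records: List[Dict]) -> List[Dict]:
    """Per-record min/max/mean/var, plus how many scales the value range spans."""
    rows = []
    for rec in records:
        values = [float(v) for v in rec["data"]]
        mean = sum(values) / len(values)
        # Population variance; the plugin's "Var" column may use another estimator.
        var = sum((v - mean) ** 2 for v in values) / len(values)
        scale: Optional[float] = rec.get("scale")
        value_range = max(values) - min(values)
        rows.append({
            "module_name": rec["module_name"],
            "attr": rec["attr"],
            "min": min(values),
            "max": max(values),
            "mean": mean,
            "var": var,
            "scale": scale,
            # Number of quantization steps the data occupies (None for float outputs)
            "range_in_scales": value_range / scale if scale else None,
        })
    return rows
```

A layer whose `range_in_scales` is very small uses only a few quantization levels. A very large value relative to the available levels may hint that a wider data type is worth evaluating.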
```python
def profile_featuremap(
    featuremap: List[Dict],
    with_tensorboard: bool = False,
    tensorboard_dir: Optional[str] = None,
    print_per_channel_scale: bool = False,
    show_per_channel: bool = False,
    out_dir: Optional[str] = None,
    file_name: Optional[str] = None,
):
    """
    Args:
        featuremap: the output of get_raw_features.
        with_tensorboard: whether to show data distributions in TensorBoard.
            Defaults to False.
        tensorboard_dir: path for TensorBoard log files. Defaults to None.
            Only used when with_tensorboard=True.
        print_per_channel_scale: whether to print per-channel quantization
            scales. Defaults to False.
        show_per_channel: whether to show a histogram for each channel of a
            feature in TensorBoard. Defaults to False.
        out_dir: directory for the result files and plots. If not specified,
            they are saved to the current directory.
        file_name: name of the saved file and plot. If not specified, the
            defaults are "statistic.txt" and an interactive "statistic.html".
    """
```

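Notes:

- By default the two interfaces are used together: `profile_featuremap(get_raw_features(model, example_inputs), with_tensorboard=True)`. The statistics are saved to statistic.txt by default. They are also plotted to statistic.html, which you can open in a browser.
- To compute other statistics, write your own processing function for the data returned by `get_raw_features`.
- `get_raw_features` records the inputs and outputs of every layer by inserting hooks. However, PyTorch's hooks do not yet support kwargs, which leads to two cases:
  - `cat((x, y), 1)`: the argument dim=1 is filtered out and only the two tensors x and y are recorded. This is the expected behavior.
  - `cat(x=(x, y), dim=1)`: neither keyword argument is visible when the hook runs. There is currently no way to handle this case, so make sure tensor data is not passed as keyword arguments in the model's forward.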
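The kwargs limitation comes from how forward hooks are invoked: the hook receives only the positional arguments. The toy below imitates that calling convention in plain Python so it runs without torch. `ToyModule`, `record_tensors` and `toy_cat` are hypothetical names, and lists stand in for tensors. Newer PyTorch releases (2.0 and later) add a `with_kwargs` option to `register_forward_hook`; this document does not say whether the plugin uses it.

```python
from typing import Any, Callable, List, Tuple

Hook = Callable[[Any, Tuple, Any], None]


class ToyModule:
    """Hypothetical stand-in for nn.Module that calls hooks as described above."""

    def __init__(self, forward: Callable[..., Any]) -> None:
        self.forward = forward
        self.hooks: List[Hook] = []

    def register_forward_hook(self, hook: Hook) -> None:
        self.hooks.append(hook)

    def __call__(self, *args: Any, **kwargs: Any) -> Any:
        result = self.forward(*args, **kwargs)
        for hook in self.hooks:
            hook(self, args, result)  # kwargs never reach the hook
        return result


recorded: List[List[list]] = []


def record_tensors(module: Any, inputs: Tuple, output: Any) -> None:
    """Keep only tensor-like leaves (lists here); plain ints such as dim are dropped."""
    leaves: List[list] = []

    def walk(obj: Any) -> None:
        if isinstance(obj, list):
            leaves.append(obj)
        elif isinstance(obj, tuple):
            for item in obj:
                walk(item)

    walk(inputs)
    recorded.append(leaves)


def toy_cat(tensors: Tuple[list, ...], dim: int = 0) -> list:
    return [v for t in tensors for v in t]


cat = ToyModule(toy_cat)
cat.register_forward_hook(record_tensors)
cat(([1], [2]), 1)              # positional call: the hook records [[1], [2]]
cat(tensors=([1], [2]), dim=1)  # keyword call: the hook records []
```

The second call still computes the right result, but the hook sees no inputs at all. This is exactly why tensors should be passed positionally in `forward`.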

4.2.3.8.3.1. Example

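```python
import torch

import horizon_plugin_pytorch as horizon
from horizon_plugin_pytorch.march import March, set_march
from horizon_plugin_pytorch.utils.quant_profiler import (
    get_raw_features,
    profile_featuremap,
)

# Net is the model defined in the similarity comparison example above
set_march(March.BAYES)
device = torch.device("cuda")

float_net = Net(quant=True, share_op=True).to(device)
float_net.qconfig = horizon.quantization.get_default_qat_qconfig()
qat_net = horizon.quantization.prepare_qat(float_net, inplace=False)
qat_net = qat_net.to(device)

data = torch.arange(1 * 3 * 4 * 4) / 100 + 1
data = data.reshape((1, 3, 4, 4))
data = data.to(torch.float32).to(device)

profile_featuremap(get_raw_features(qat_net, (data, data)), with_tensorboard=True)
```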

After running, the following files are generated in the current directory, or in the directory given by the `out_dir` argument:

statistic.txt

| Module Index | Module Name | Module Type | Input/Output/Attr | Min | Max | Mean | Var | Scale |
|---|---|---|---|---|---|---|---|---|
| 0 | quant_stubx | `<class 'horizon_plugin_pytorch.nn.qat.stubs.QuantStub'>` | input | -2.9995410 | 2.9934216 | -0.0161597 | 3.0371325 |  |
| 0 | quant_stubx | `<class 'horizon_plugin_pytorch.nn.qat.stubs.QuantStub'>` | output | -3.0000000 | 3.0000000 | -0.0133929 | 3.2358592 | 1.0000000 |
| 1 | quant_stuby | `<class 'horizon_plugin_pytorch.nn.qat.stubs.QuantStub'>` | input | 0.5000594 | 0.9993884 | 0.7544266 | 0.0207558 |  |
| 1 | quant_stuby | `<class 'horizon_plugin_pytorch.nn.qat.stubs.QuantStub'>` | output | 1.0000000 | 1.0000000 | 1.0000000 | 0.0000000 | 1.0000000 |
| 2 | mul_op[mul] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | input-0 | -3.0000000 | 3.0000000 | -0.0133929 | 3.2358592 | 1.0000000 |
| 2 | mul_op[mul] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | input-1 | 1.0000000 | 1.0000000 | 1.0000000 | 0.0000000 | 1.0000000 |
| 2 | mul_op[mul] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | output | -3.0000000 | 3.0000000 | -0.0133929 | 3.2358592 | 1.0000000 |
| 3 | cat_op[cat] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | input-0-0 | -3.0000000 | 3.0000000 | -0.0133929 | 3.2358592 | 1.0000000 |
| 3 | cat_op[cat] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | input-0-1 | -3.0000000 | 3.0000000 | -0.0133929 | 3.2358592 | 1.0000000 |
| 3 | cat_op[cat] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | output | -3.0000000 | 3.0000000 | -0.0133929 | 3.2346549 | 1.0000000 |
| 4 | cat_op(1)[cat] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | input-0 | -3.0000000 | 3.0000000 | -0.0133929 | 3.2346549 | 1.0000000 |
| 4 | cat_op(1)[cat] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | input-1 | 1.0000000 | 1.0000000 | 1.0000000 | 0.0000000 | 1.0000000 |
| 4 | cat_op(1)[cat] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | output | -3.0000000 | 3.0000000 | 0.3244048 | 2.3844402 | 1.0000000 |
| 5 | quantized_ops.0 | `<class 'horizon_plugin_pytorch.nn.qat.relu.ReLU'>` | input | -3.0000000 | 3.0000000 | 0.3244048 | 2.3844402 | 1.0000000 |
| 5 | quantized_ops.0 | `<class 'horizon_plugin_pytorch.nn.qat.relu.ReLU'>` | output | 0.0000000 | 3.0000000 | 0.8363096 | 0.7005617 | 1.0000000 |
| 6 | quantized_ops.1 | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | input | 0.0000000 | 3.0000000 | 0.8363096 | 0.7005617 | 1.0000000 |
| 6 | quantized_ops.1 | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | output | 1.0000000 | 1.0000000 | 1.0000000 | 0.0000000 | 1.0000000 |
| 7 | quantized_ops.2.sub[sub] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | input-0 | 1.0000000 | 1.0000000 | 1.0000000 | 0.0000000 | 1.0000000 |
| 7 | quantized_ops.2.sub[sub] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | input-1 | 1.0000000 | 1.0000000 | 1.0000000 | 0.0000000 | 1.0000000 |
| 7 | quantized_ops.2.sub[sub] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | output | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 8 | quantized_ops.2.exp | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | input | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 8 | quantized_ops.2.exp | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | output | 1.0000000 | 1.0000000 | 1.0000000 | 0.0000000 | 1.0000000 |
| 9 | quantized_ops.2.sum[sum] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | input | 1.0000000 | 1.0000000 | 1.0000000 | 0.0000000 | 1.0000000 |
| 9 | quantized_ops.2.sum[sum] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | output | 18.0000000 | 18.0000000 | 18.0000000 | 0.0000000 | 1.0000000 |
| 10 | quantized_ops.2.reciprocal | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | input | 18.0000000 | 18.0000000 | 18.0000000 | 0.0000000 | 1.0000000 |
| 10 | quantized_ops.2.reciprocal | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | output | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 11 | quantized_ops.2.mul[mul] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | input-0 | 1.0000000 | 1.0000000 | 1.0000000 | 0.0000000 | 1.0000000 |
| 11 | quantized_ops.2.mul[mul] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | input-1 | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 11 | quantized_ops.2.mul[mul] | `<class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>` | output | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 12 | quantized_ops.3 | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | input | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 12 | quantized_ops.3 | `<class 'horizon_plugin_pytorch.nn.qat.segment_lut.SegmentLUT'>` | output | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 13 | quantized_ops.4 | `<class 'horizon_plugin_pytorch.nn.qat.interpolate.Interpolate'>` | input | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 13 | quantized_ops.4 | `<class 'horizon_plugin_pytorch.nn.qat.interpolate.Interpolate'>` | output | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 14 | quantized_ops.5 | `<class 'horizon_plugin_pytorch.nn.qat.interpolate.Interpolate'>` | input | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 14 | quantized_ops.5 | `<class 'horizon_plugin_pytorch.nn.qat.interpolate.Interpolate'>` | output | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 15 | quantized_ops.6 | `<class 'horizon_plugin_pytorch.nn.qat.avg_pool2d.AvgPool2d'>` | input | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 15 | quantized_ops.6 | `<class 'horizon_plugin_pytorch.nn.qat.avg_pool2d.AvgPool2d'>` | output | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 16 | quantized_ops.7 | `<class 'horizon_plugin_pytorch.nn.qat.upsampling.Upsample'>` | input | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 16 | quantized_ops.7 | `<class 'horizon_plugin_pytorch.nn.qat.upsampling.Upsample'>` | output | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 17 | quantized_ops.8 | `<class 'horizon_plugin_pytorch.nn.qat.upsampling.UpsamplingBilinear2d'>` | input | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 17 | quantized_ops.8 | `<class 'horizon_plugin_pytorch.nn.qat.upsampling.UpsamplingBilinear2d'>` | output | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 18 | dequant_stub | `<class 'horizon_plugin_pytorch.nn.qat.stubs.DeQuantStub'>` | input | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 | 1.0000000 |
| 18 | dequant_stub | `<class 'horizon_plugin_pytorch.nn.qat.stubs.DeQuantStub'>` | output | 0.0000000 | 0.0000000 | 0.0000000 | 0.0000000 |  |

statistic.html

If `with_tensorboard=True`, TensorBoard log files are also generated in the specified directory, and you can view them with TensorBoard.

4.2.3.8.4. Stepwise Quantization

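If QAT training runs into difficulties and the metrics do not improve, you may need stepwise quantization to find the accuracy bottleneck. To do this, keep part of the model in floating point by setting `qconfig=None` on it.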

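```python
# from horizon_plugin_pytorch.quantization import prepare_qat

def prepare_qat(
    model: torch.nn.Module,
    mapping: Optional[Dict[torch.nn.Module, torch.nn.Module]] = None,
    inplace: bool = False,
    optimize_graph: bool = False,
    hybrid: bool = False,
):
    """Stepwise quantization is enabled through the `hybrid` argument of prepare_qat.

    Args:
        hybrid: generate a hybrid model in which some intermediate ops run in
            floating point. Limitations:
            1. The hybrid model cannot pass check_model and cannot be compiled.
            2. Some quantized ops cannot take float inputs directly; insert a
               QuantStub in front of them manually.
    """
```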

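Notes:

- Quantized op → float op: a quantized op outputs a QTensor. By default, a QTensor cannot be fed directly to a float op, which raises NotImplementedError during forward. The interface above lifts this restriction.
- Float op → quantized op: during QAT, a quantized op is generally implemented as a float op plus FakeQuant, so most quantized ops can take a plain Tensor as input. However, to stay aligned with fixed-point behavior, a few ops need the input scale during QAT and therefore require a QTensor input. Checks have been added for these cases. If you hit such an error, manually insert a QuantStub between the float op and the quantized op.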

4.2.3.8.4.1. Example

```python
import numpy as np
import torch
from horizon_plugin_pytorch.march import March, set_march
from horizon_plugin_pytorch.nn import qat
from horizon_plugin_pytorch.quantization import (
    get_default_qat_qconfig,
    prepare_qat,
)
from torch import nn
from torch.quantization import DeQuantStub, QuantStub


class HyperQuantModel(nn.Module):
    def __init__(self, channels=3) -> None:
        super().__init__()
        self.quant = QuantStub()
        self.conv0 = nn.Conv2d(channels, channels, 1)
        self.conv1 = nn.Conv2d(channels, channels, 1)
        self.conv2 = nn.Conv2d(channels, channels, 1)
        self.dequant = DeQuantStub()

    def forward(self, input):
        x = self.quant(input)
        x = self.conv0(x)
        x = self.conv1(x)
        x = self.conv2(x)
        return self.dequant(x)

    def set_qconfig(self):
        self.qconfig = get_default_qat_qconfig()
        # qconfig=None keeps conv1 in floating point
        self.conv1.qconfig = None


shape = np.random.randint(10, 20, size=4).tolist()
data = torch.rand(size=shape)

model = HyperQuantModel(shape[1])
model.set_qconfig()

set_march(March.BAYES)

qat_model = prepare_qat(model, hybrid=True)
assert isinstance(qat_model.conv0, qat.Conv2d)
# In the QAT model, conv1 is still a float conv
assert isinstance(qat_model.conv1, nn.Conv2d)
assert isinstance(qat_model.conv2, qat.Conv2d)

qat_model(data)
```

4.2.3.8.5. Shared Op Check

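This interface counts and prints how many times each module is called during one forward pass, so you can check whether the model contains shared ops. If the same module instance appears several times in the model under different names, the function uses the first name and counts all calls under it. A warning is shown in that case.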

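```python
# from horizon_plugin_pytorch.utils.quant_profiler import get_module_called_count

def get_module_called_count(
    model: torch.nn.Module,
    example_inputs,
    check_leaf_module: callable = None,
    print_tabulate: bool = True,
) -> Dict[str, int]:
    """Count how many times each leaf module of the model is called.

    Args:
        model: the model.
        example_inputs: model inputs.
        check_leaf_module: function that checks whether a module is a leaf.
            Defaults to None, which uses the predefined is_leaf_module. That
            function treats all ops defined in the plugin, as well as
            unsupported float ops, as leaves.
        print_tabulate: whether to print the result. Defaults to True.

    Returns:
        Dict[str, int]: the name of each layer and its call count.
    """
```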

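The counting rule above keeps one counter per module instance and reports it under the instance's first name. It can be sketched without torch. This is a hypothetical illustration, not the plugin's code. It takes an explicit list of (name, module) pairs and the order in which modules were called, whereas the real tool gathers both from the model during a forward pass. Note that torch's `named_modules()` drops duplicate instances by default (`remove_duplicate=True`), which is why the sketch takes the pairs directly.

```python
import warnings
from typing import Dict, Iterable, List, Tuple


def count_calls(
    named_modules: Iterable[Tuple[str, object]],
    call_sequence: List[object],
) -> Dict[str, int]:
    """Count calls per module instance, keyed by the first name it was registered under."""
    first_name: Dict[int, str] = {}
    for name, module in named_modules:
        key = id(module)
        if key in first_name:
            warnings.warn(
                f"'{name}' is the same instance as '{first_name[key]}'; "
                f"counting its calls under '{first_name[key]}'"
            )
        else:
            first_name[key] = name
    # Every registered module starts at 0, so modules that are never called still appear.
    counts = {name: 0 for name in first_name.values()}
    for module in call_sequence:
        counts[first_name[id(module)]] += 1
    return counts
```

A count greater than 1 marks a shared op, like `cat_op` in the example below. A count of 0 marks a module that exists but is never used in forward.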
4.2.3.8.5.1. Example

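```python
import numpy as np
import torch
from horizon_plugin_pytorch.utils.quant_profiler import get_module_called_count

# Net is the model defined in the similarity comparison example above
shape = np.random.randint(10, 20, size=4).tolist()
data0 = torch.rand(size=shape)
data1 = torch.rand(size=shape)

float_net = Net()
get_module_called_count(float_net, (data0, data1))
```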

Output:

```text
name             called times
---------------  --------------
quant_stubx      1
quant_stuby      1
unused           0
mul_op           1
cat_op           2
quantized_ops.0  1
quantized_ops.1  1
quantized_ops.2  1
quantized_ops.3  1
quantized_ops.4  1
quantized_ops.5  1
quantized_ops.6  1
quantized_ops.7  1
quantized_ops.8  1
dequant_stub     1
float_ops.0      1
float_ops.1      1
float_ops.2      1
float_ops.3      1
```

4.2.3.8.6. Fuse Check

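The correctness of model fusion has two aspects:

- whether every op that can be fused has been fused
- whether the ops that have been fused are fused correctly

This interface can only check the first aspect. For the second, use the similarity comparison tool to compare the feature similarity of the model before and after fusion. If the similarity of every feature after a certain op is poor, the fusion of that op may be wrong. Fusion merges several ops into one and replaces the others with Identity, so low feature similarity at those Identity positions can be normal.

This interface only accepts float models.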

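```python
# from horizon_plugin_pytorch.utils.quant_profiler import check_unfused_operations

def check_unfused_operations(
    model: torch.nn.Module,
    example_inputs,
    print_tabulate=True,
):
    """Check whether the model contains ops that could be fused but are not.

    This interface only detects unfused ops. It cannot check whether fusion
    is correct. To verify that, compare the two models before and after
    fusion with the `featuremap_similarity` interface.

    Args:
        model: input model.
        example_inputs: model inputs.
        print_tabulate: whether to print the result. Defaults to True.

    Returns:
        List[List[str]]: list of fusable op patterns.
    """
```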

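As a rough model of what "fusable but not fused" means, the sketch below scans a straight-line sequence of ops for known patterns such as conv -> bn -> add -> relu. The pattern table and `find_unfused` are hypothetical; the plugin's real list of fusable patterns is not given here. The real check presumably follows the model's actual call graph. This is suggested by the example output below, which pairs the shared `shared_conv` with each BatchNorm that follows it, something a single linear scan cannot do.

```python
from typing import List, Sequence, Tuple

# Hypothetical pattern table, longest patterns first.
FUSABLE_PATTERNS: List[Tuple[str, ...]] = [
    ("Conv2d", "BatchNorm2d", "add", "ReLU"),
    ("Conv2d", "BatchNorm2d", "add"),
    ("Conv2d", "BatchNorm2d", "ReLU"),
    ("Conv2d", "add", "ReLU"),
    ("Conv2d", "BatchNorm2d"),
    ("Conv2d", "add"),
    ("Conv2d", "ReLU"),
]


def find_unfused(ops: Sequence[Tuple[str, str]]) -> List[List[str]]:
    """Greedily match fusable patterns in a linear chain of (name, type) ops."""
    found: List[List[str]] = []
    i = 0
    while i < len(ops):
        for pattern in FUSABLE_PATTERNS:
            window = ops[i:i + len(pattern)]
            if tuple(op_type for _, op_type in window) == pattern:
                found.append([name for name, _ in window])
                i += len(pattern)
                break
        else:  # no pattern starts at position i
            i += 1
    return found
```

Matching the longest pattern first means a full conv -> bn -> add -> relu chain is reported as one group rather than as a shorter conv -> bn pair.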
4.2.3.8.6.1. Example

```python
import horizon_plugin_pytorch as horizon
import numpy as np
import torch
from horizon_plugin_pytorch import nn as horizon_nn
from horizon_plugin_pytorch.march import March, set_march
from horizon_plugin_pytorch.nn.quantized import FloatFunctional
from horizon_plugin_pytorch.utils.quant_profiler import (
    check_unfused_operations,
    featuremap_similarity,
    get_module_called_count,
    get_raw_features,
    profile_featuremap,
    profile_module_constraints,
)
from torch import nn
from torch.quantization import DeQuantStub, QuantStub


class Conv2dModule(nn.Module):
    def __init__(
        self,
        in_channels,
        out_channels,
        kernel_size=1,
        stride=1,
        padding=0,
        dilation=1,
        groups=1,
        bias=True,
        padding_mode="zeros",
    ):
        super().__init__()
        self.conv2d = nn.Conv2d(
            in_channels,
            out_channels,
            kernel_size,
            stride,
            padding,
            dilation,
            groups,
            bias,
            padding_mode,
        )
        self.add = FloatFunctional()
        self.bn_mod = nn.BatchNorm2d(out_channels)
        self.relu_mod = nn.ReLU()

    def forward(self, x, y):
        x = self.conv2d(x)
        x = self.bn_mod(x)
        x = self.add.add(x, y)
        x = self.relu_mod(x)
        return x

    def fuse_model(self):
        from horizon_plugin_pytorch.quantization import fuse_known_modules

        # Fuse conv -> bn -> add -> relu into a single module
        fuse_list = ["conv2d", "bn_mod", "add", "relu_mod"]
        torch.quantization.fuse_modules(
            self,
            fuse_list,
            inplace=True,
            fuser_func=fuse_known_modules,
        )


class TestFuseNet(nn.Module):
    def __init__(self, channels) -> None:
        super().__init__()
        self.convmod1 = Conv2dModule(channels, channels)
        self.convmod2 = Conv2dModule(channels, channels)
        self.convmod3 = Conv2dModule(channels, channels)
        self.shared_conv = nn.Conv2d(channels, channels, 1)
        self.bn1 = nn.BatchNorm2d(channels)
        self.bn2 = nn.BatchNorm2d(channels)
        self.sub = FloatFunctional()
        # Instantiate the module; assigning the class nn.ReLU itself would not apply ReLU
        self.relu = nn.ReLU()

    def forward(self, x, y):
        x = self.convmod1(x, y)
        x = self.convmod2(y, x)
        x = self.convmod3(x, y)
        x = self.shared_conv(x)
        x = self.bn1(x)
        y = self.shared_conv(y)
        y = self.bn2(y)
        x = self.sub.sub(x, y)
        x = self.relu(x)
        return x

    def fuse_model(self):
        # convmod2 is left unfused, so the check reports it
        self.convmod1.fuse_model()
        self.convmod3.fuse_model()


shape = np.random.randint(10, 20, size=4).tolist()
data0 = torch.rand(size=shape)
data1 = torch.rand(size=shape)

float_net = TestFuseNet(shape[1])
float_net.fuse_model()
check_unfused_operations(float_net, (data0, data1))
```

Output:

```text
name                 type
-------------------  ------------------------------------------------
shared_conv(shared)  <class 'torch.nn.modules.conv.Conv2d'>
bn1                  <class 'torch.nn.modules.batchnorm.BatchNorm2d'>

name                 type
-------------------  ------------------------------------------------
shared_conv(shared)  <class 'torch.nn.modules.conv.Conv2d'>
bn2                  <class 'torch.nn.modules.batchnorm.BatchNorm2d'>

name               type
-----------------  --------------------------------------------------------------------------------
convmod2.conv2d    <class 'torch.nn.modules.conv.Conv2d'>
convmod2.bn_mod    <class 'torch.nn.modules.batchnorm.BatchNorm2d'>
convmod2.add       <class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'>
convmod2.relu_mod  <class 'torch.nn.modules.activation.ReLU'>
```

4.2.3.8.7. Single-Op Conversion Accuracy Debugging

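When accuracy drops after a QAT model is converted to a quantized model, you may need to find the op responsible. One way is to keep some key ops of the quantized model in their QAT form and check whether the drop goes away.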

```python
# from horizon_plugin_pytorch.utils.quant_profiler import set_preserve_qat_mode

def set_preserve_qat_mode(model: nn.Module, prefixes=(), types=(), value=True):
    """
    Set mod.preserve_qat_mode=True so that mod stays in QAT state in the
    converted quantized model.

    Args:
        model: the model to configure.
        prefixes: layer names of the ops to configure (layers whose names
            start with one of the prefixes).
        types: types of the ops to configure.
        value: the value to set, i.e. preserve_qat_mode=value. Defaults to True.
    """
```

4.2.3.8.7.1. Usage example

model = TestFuseNet(3)  # TestFuseNet requires the channel count
model.fuse_model()
model.qconfig = get_default_qat_qconfig()

# Either call the helper or set preserve_qat_mode=True manually; the two lines are equivalent.
# prefixes must be a tuple: ("convmod1",), not ("convmod1")
set_preserve_qat_mode(model, ("convmod1",), ())
model.convmod1.preserve_qat_mode = True

set_march(March.BAYES)
horizon.quantization.prepare_qat(model, inplace=True)
quant_model = horizon.quantization.convert(model.eval(), inplace=False)

# In the quantized model, convmod1.add is still a qat.ConvAddReLU2d
assert isinstance(quant_model.convmod1.add, qat.ConvAddReLU2d)

4.2.3.8.8. Quantization configuration check

Checks the quantization configuration of every op in a QAT model. The input must be a QAT model. The results are saved to qconfig_info.txt.

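# from horizon_plugin_pytorch.utils.quant_profiler import check_qconfig

def check_qconfig(
    model: torch.nn.Module,
    example_inputs: Any,
    prefixes: Tuple = (),
    types: Tuple = (),
    custom_check_func: Optional[Callable] = None,
    out_dir: Optional[str] = None,
):
    """Check the quantization configuration of a QAT model.

    This function
    1) checks the quantization config of each layer's output activation and
       weight. The config information is saved to `qconfig_info.txt`.
    2) checks the input and output dtypes of each layer.

    By default, it prints a message when it detects any of the following:
    1) the activation of the output layer is not quantized
    2) a fixed scale
    3) a weight that is not int8-quantized (only int8 weights are currently supported)
    4) a layer whose input and output dtypes differ

    To check more information, pass a custom check function through
    `custom_check_func`.

    Args:
        model: the input model; must be a QAT model
        example_inputs: model inputs
        prefixes: layer names of the ops whose quantization config should be
            checked (layers whose names start with one of the prefixes)
        types: types of the ops whose quantization config should be checked
        custom_check_func: user-defined function for checking other
            information. It is called inside a module hook, so it must have
            the signature:
                func(module, input, output) -> None
        out_dir: directory for the result file `qconfig_info.txt`. If None,
            it is saved to the current directory.
    """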

4.2.3.8.8.1. Usage example

float_net = TestFuseNet(3)
float_net.fuse_model()

set_march(March.BAYES)

# Deliberately construct unsupported or special cases
float_net.qconfig = get_default_qat_qconfig(weight_dtype="qint16")
float_net.sub.qconfig = get_default_qat_qconfig(
    activation_qkwargs={
        "observer": FixedScaleObserver,
        "scale": 1 / 2 ** 15,
        "dtype": "qint16",
    }
)
qat_net = prepare_qat(float_net)

# Example inputs for TestFuseNet.forward(x, y); the shape is illustrative
example_inputs = (torch.rand(1, 3, 16, 16), torch.rand(1, 3, 16, 16))
check_qconfig(qat_net, example_inputs)

Output

qconfig_info.txt

Each layer out qconfig:
+-----------------+----------------------------------------------------------------------------------+--------------------+-------------+---------------+-----------+
| Module Name     | Module Type                                                                      | Input dtype        | out dtype   | per-channel   |   ch_axis |
|-----------------+----------------------------------------------------------------------------------+--------------------+-------------+---------------+-----------|
| quantx          | <class 'horizon_plugin_pytorch.nn.qat.stubs.QuantStub'>                          | [torch.float32]    | qint8       | False         |        -1 |
| quanty          | <class 'horizon_plugin_pytorch.nn.qat.stubs.QuantStub'>                          | [torch.float32]    | qint8       | False         |        -1 |
| convmod1.add    | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'>                     | ['qint8', 'qint8'] | qint8       | False         |        -1 |
| convmod2.conv2d | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'>                            | ['qint8']          | qint8       | False         |        -1 |
| convmod2.bn_mod | <class 'horizon_plugin_pytorch.nn.qat.batchnorm.BatchNorm2d'>                    | ['qint8']          | qint8       | False         |        -1 |
| convmod2.add    | <class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'> | ['qint8', 'qint8'] | qint8       | False         |        -1 |
| convmod3.add    | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'>                     | ['qint8', 'qint8'] | qint8       | False         |        -1 |
| shared_conv     | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'>                            | ['qint8']          | qint8       | False         |        -1 |
| bn1             | <class 'horizon_plugin_pytorch.nn.qat.batchnorm.BatchNorm2d'>                    | ['qint8']          | qint8       | False         |        -1 |
| shared_conv(1)  | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'>                            | ['qint8']          | qint8       | False         |        -1 |
| bn2             | <class 'horizon_plugin_pytorch.nn.qat.batchnorm.BatchNorm2d'>                    | ['qint8']          | qint8       | False         |        -1 |
| sub             | <class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'> | ['qint8', 'qint8'] | qint16      | False         |        -1 |
+-----------------+----------------------------------------------------------------------------------+--------------------+-------------+---------------+-----------+

Weight qconfig:
+-----------------+--------------------------------------------------------------+----------------+---------------+-----------+
| Module Name     | Module Type                                                  | weight dtype   | per-channel   |   ch_axis |
|-----------------+--------------------------------------------------------------+----------------+---------------+-----------|
| convmod1.add    | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'> | qint16         | True          |         0 |
| convmod2.conv2d | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'>        | qint16         | True          |         0 |
| convmod3.add    | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'> | qint16         | True          |         0 |
| shared_conv     | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'>        | qint16         | True          |         0 |
| shared_conv(1)  | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'>        | qint16         | True          |         0 |
+-----------------+--------------------------------------------------------------+----------------+---------------+-----------+

Please check if these OPs qconfigs are expected..
+-----------------+----------------------------------------------------------------------------------+------------------------------------------------------------------+
| Module Name     | Module Type                                                                      | Msg                                                              |
|-----------------+----------------------------------------------------------------------------------+------------------------------------------------------------------|
| convmod1.add    | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'>                     | qint16 weight!!!                                                 |
| convmod2.conv2d | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'>                            | qint16 weight!!!                                                 |
| convmod3.add    | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'>                     | qint16 weight!!!                                                 |
| shared_conv     | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'>                            | qint16 weight!!!                                                 |
| shared_conv(1)  | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'>                            | qint16 weight!!!                                                 |
| sub             | <class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'> | Fixed scale 3.0517578125e-05                                     |
| sub             | <class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'> | input dtype ['qint8', 'qint8'] is not same with out dtype qint16 |
+-----------------+----------------------------------------------------------------------------------+------------------------------------------------------------------+

Screen output

Please check if these OPs qconfigs are expected..
+-----------------+----------------------------------------------------------------------------------+------------------------------------------------------------------+
| Module Name     | Module Type                                                                      | Msg                                                              |
|-----------------+----------------------------------------------------------------------------------+------------------------------------------------------------------|
| convmod1.add    | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'>                     | qint16 weight!!!                                                 |
| convmod2.conv2d | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'>                            | qint16 weight!!!                                                 |
| convmod3.add    | <class 'horizon_plugin_pytorch.nn.qat.conv2d.ConvAddReLU2d'>                     | qint16 weight!!!                                                 |
| shared_conv     | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'>                            | qint16 weight!!!                                                 |
| shared_conv(1)  | <class 'horizon_plugin_pytorch.nn.qat.conv2d.Conv2d'>                            | qint16 weight!!!                                                 |
| sub             | <class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'> | Fixed scale 3.0517578125e-05                                     |
| sub             | <class 'horizon_plugin_pytorch.nn.quantized.functional_modules.FloatFunctional'> | input dtype ['qint8', 'qint8'] is not same with out dtype qint16 |
+-----------------+----------------------------------------------------------------------------------+------------------------------------------------------------------+
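The `Fixed scale 3.0517578125e-05` message is the `1 / 2 ** 15` set on `sub` in the example above. A quick calculation shows what that fixed scale can represent, assuming qint16 uses the full signed 16-bit range [-32768, 32767]:

```python
# Fixed scale set on `sub` via activation_qkwargs in the example above.
scale = 1 / 2 ** 15
print(scale)  # 3.0517578125e-05, the "Fixed scale" reported by check_qconfig

# Assumption: qint16 uses the full signed 16-bit range [-32768, 32767].
qmin, qmax = -(2 ** 15), 2 ** 15 - 1
print(qmin * scale, qmax * scale)  # -1.0 0.999969482421875
```

Under that assumption, any output of `sub` outside roughly [-1, 1) would saturate, which is why check_qconfig flags fixed scales for review.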

4.2.3.8.9. Model weight comparison

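By default, this interface computes the similarity of each layer's weight in the model (for layers that have one), prints the results to the screen, and saves them to a file. You can also set with_tensorboard=True to plot weight histograms for a more intuitive comparison.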

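# from horizon_plugin_pytorch.utils.quant_profiler import compare_weights

def compare_weights(
    float_model: torch.nn.Module,
    qat_quantized_model: torch.nn.Module,
    similarity_func="Cosine",
    with_tensorboard: bool = False,
    tensorboard_dir: Optional[str] = None,
    out_dir: Optional[str] = None,
) -> Dict[str, Dict[str, torch.Tensor]]:
    """Compare the weights of float/qat/quantized models.

    This function uses torch.quantization._numeric_suite.compare_weights to
    compare the weight of each layer in the model. The weight similarity and
    atol are printed to the screen and saved to "weight_comparison.txt".
    You can also set with_tensorboard=True to plot weight histograms in
    tensorboard.

    Args:
        float_model: the float model
        qat_quantized_model: the qat or quantized model
        similarity_func: similarity function. Supports Cosine/MSE/L1/KL/SQNR
            and any user-defined similarity function. A custom function must
            return a scalar or a tensor containing a single number; otherwise
            the displayed results may not be as expected. Defaults to Cosine.
        with_tensorboard: whether to use tensorboard. Defaults to False.
        tensorboard_dir: directory for tensorboard logs. Defaults to None.
        out_dir: directory for the txt results. Defaults to None, which saves
            to the current directory.

    Returns:
        A dict recording the weights of the two models, in the format:
        * KEY (str): module name (e.g. layer1.0.conv.weight)
        * VALUE (dict): the weights of the corresponding layer in both models:
            "float": the weight in the float model
            "quantized": the weight in the qat/quantized model
    """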

4.2.3.8.9.1. Usage example

float_net = Resnet18().to(device)
float_net.fuse_model()

set_march(March.BAYES)
float_net.qconfig = get_default_qat_qconfig()
qat_net = prepare_qat(float_net)
qat_net(data)

quantized_net = convert(qat_net)
quantized_net(data)

compare_weights(float_net, qat_net)

The results are printed to the screen and saved to weight_comparison.txt.

+-------------------------------------+--------------+-----------+
| Weight Name                         |   Similarity |      Atol |
|-------------------------------------+--------------+-----------|
| conv1.conv.weight                   |    1.0000000 | 0.0000000 |
| layer1.0.conv_cell1.conv.weight     |    1.0000000 | 0.0000000 |
| layer1.0.shortcut.conv.weight       |    1.0000000 | 0.0000000 |
| layer1.0.conv_cell2.skip_add.weight |    1.0000000 | 0.0000000 |
| layer1.1.conv_cell1.conv.weight     |    1.0000000 | 0.0000000 |
| layer1.1.conv_cell2.conv.weight     |    1.0000000 | 0.0000000 |
| layer2.0.conv_cell1.conv.weight     |    1.0000000 | 0.0000000 |
| layer2.0.shortcut.conv.weight       |    1.0000000 | 0.0000000 |
| layer2.0.conv_cell2.skip_add.weight |    1.0000000 | 0.0000000 |
| layer2.1.conv_cell1.conv.weight     |    1.0000000 | 0.0000001 |
| layer2.1.conv_cell2.conv.weight     |    1.0000000 | 0.0000001 |
| layer3.0.conv_cell1.conv.weight     |    1.0000000 | 0.0000001 |
| layer3.0.shortcut.conv.weight       |    1.0000000 | 0.0000001 |
| layer3.0.conv_cell2.skip_add.weight |    1.0000000 | 0.0000002 |
| layer3.1.conv_cell1.conv.weight     |    1.0000000 | 0.0000005 |
| layer3.1.conv_cell2.conv.weight     |    1.0000001 | 0.0000008 |
| conv2.conv.weight                   |    1.0000001 | 0.0000010 |
| pool.conv.weight                    |    0.9999999 | 0.0000024 |
| fc.weight                           |    1.0000000 | 0.0000172 |
+-------------------------------------+--------------+-----------+
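compare_weights also returns the weights themselves. When the table is long, it can help to sort layers by their largest deviation. A minimal sketch, assuming the return format documented above (`{name: {"float": Tensor, "quantized": Tensor}}`); `rank_by_max_abs_diff` is a hypothetical helper and `fake_result` is made-up data standing in for a real result. Entries that are PyTorch quantized tensors are dequantized first, since ordinary arithmetic on them is not supported.

```python
import torch


def _as_float64(t: torch.Tensor) -> torch.Tensor:
    # Quantized tensors must be dequantized before ordinary arithmetic.
    return t.dequantize().double() if t.is_quantized else t.double()


def rank_by_max_abs_diff(weights: dict) -> list:
    """Sort a compare_weights-style dict by max |float - quantized|, largest first.

    Assumes the documented format {name: {"float": Tensor, "quantized": Tensor}}.
    """
    rows = []
    for name, pair in weights.items():
        diff = (_as_float64(pair["float"]) - _as_float64(pair["quantized"])).abs().max().item()
        rows.append((name, diff))
    return sorted(rows, key=lambda row: row[1], reverse=True)


# Made-up stand-in for the dict returned by compare_weights(float_net, qat_net).
fake_result = {
    "conv1.conv.weight": {"float": torch.ones(2, 2), "quantized": torch.ones(2, 2)},
    "fc.weight": {"float": torch.zeros(3), "quantized": torch.tensor([0.0, 0.5, -0.25])},
}

for name, diff in rank_by_max_abs_diff(fake_result):
    print(f"{name:<20} {diff:.4f}")
```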